One day, terror struck: in the early spring of 1975 I was invited to Caltech to give a talk.

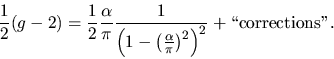

I could go to any other place and say that Kinoshita and I have computed thousands of diagrams and that the answer is, well, the answer is:

Now, you probably do not know how stupid the quantum field theory is in practice. What is done (or at least was done, before the field theorists left this planet for pastures beyond the Planck length) is:

And indeed, when the diagrams that we had computed are grouped into gauge invariant subsets, a rather surprising thing happens: while the finite part of each Feynman diagram is of order 10 to 100, every subset adds up to approximately

I prepared the talk for Feynman, but was fated to arrive from SLAC at Caltech precisely five days after the discovery of the ![]() particle. I had to give an impromptu, irrelevant talk about what the total ![]() cross-section would have looked like if ![]() were a heavy vector boson, and had only 5 minutes for my conjecture about the finiteness of gauge theories. Feynman liked it and gave me some sage advice.

The eighth order contribution to the electron g - 2 from diagrams without any fermion loop is -1.9344(1370) as of 1990 (in my book on QED). So, unfortunately, it appears that it does not conform to your pet theory.

The heroic calculation of Aoyama, Hayakawa, Kinoshita, and Nio (Jul 2012) has progressed to the tenth order. They use the self-energy method for evaluating (g-2), described in the Cvitanović and Kinoshita triptych of sixth-order papers, so they are not able to separate out the minimal gauge sets that I use to argue that the mass-shell QED is convergent. They report for the “quenched” set (no lepton loops, only virtual photons):

$A^{(8)}_{1,V} \approx -2$, while my guess based on 6 gauge sets would be $A^{(8)}_{1,V} = 0$, which is pretty darn close, considering this is a sum of 518 diagrams and a zillion counterterms.

$A^{(10)}_{1,V} \approx 10$, while I predict, based on 9 gauge sets, that $A^{(10)}_{1,V} = 3/2$, which is extremely good for a sum of 6,354 diagrams! Even the sign comes out right :)

Suppose each diagram contributed ≈ ± 1 (actual numbers are more like ≈ ± 100) and they were statistically uncorrelated. Then one would estimate $A^{(10)}_{1,V} \approx \pm 80$. Cancellations that lead to $A^{(10)}_{1,V} \approx 10$ are amazing.
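The ± 80 figure is the usual random-walk estimate: a sum of N uncorrelated terms of size ± 1 has a root-mean-square value of √N, and √6354 ≈ 80. Here is a minimal Python sketch of that arithmetic, assuming each diagram contributes exactly +1 or -1 with equal probability. The function names are illustrative only and do not come from any QED code.

```python
import math
import random

# Diagram counts quoted in the text for the quenched (no lepton loop) sets,
# keyed by perturbative order.
DIAGRAM_COUNTS = {8: 518, 10: 6354}


def random_walk_rms(n_diagrams, size=1.0):
    """Root-mean-square of a sum of n uncorrelated terms, each +size or -size."""
    return size * math.sqrt(n_diagrams)


def simulated_rms(n_diagrams, size=1.0, trials=2000, seed=0):
    """Monte Carlo check of random_walk_rms using random signs (an assumption)."""
    rng = random.Random(seed)
    total_sq = 0.0
    for _ in range(trials):
        # Each of the n random bits stands for one diagram: 1 -> +size, 0 -> -size.
        plus = bin(rng.getrandbits(n_diagrams)).count("1")
        total = size * (2 * plus - n_diagrams)
        total_sq += total * total
    return math.sqrt(total_sq / trials)


if __name__ == "__main__":
    for order, n in DIAGRAM_COUNTS.items():
        print(f"order {order}: N = {n}, sqrt(N) = {random_walk_rms(n):.1f}, "
              f"simulated = {simulated_rms(n):.1f}")
```

With terms of size ≈ 100 rather than 1, the same estimate scales to roughly ± 8000 (`random_walk_rms(6354, size=100.0)`), which makes a reported value of about 10 look even more striking.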

Of course, asymptotic series can be very good, and all of this is wild guesswork that means nothing until there is a method to estimate these sums. One thing that would make the finiteness conjecture more convincing would be to check how close individual gauge sets are to ± 1/2, but I do not think that data is available. Does anyone evaluate individual vertex diagrams, rather than the self-energy diagrams?

I've now reread all the relevant literature, and see several ways forward. It is a longer story; for an overview, please see my blog.